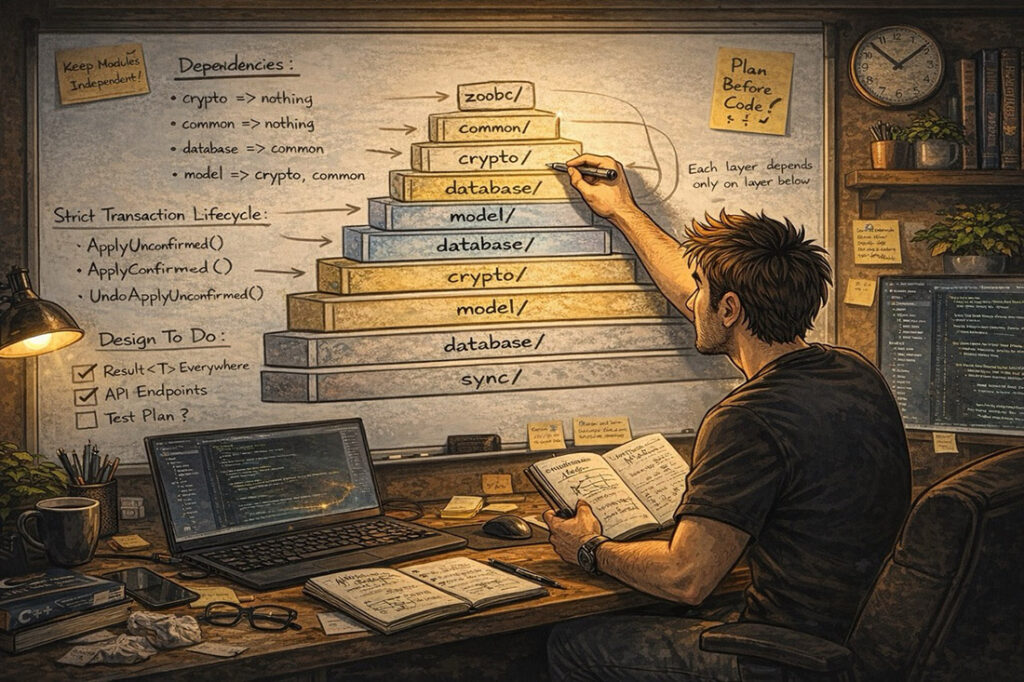

These past two weeks, I have not written a single line of production code.

That might sound strange, especially after the excitement of deciding to rebuild ZooBC, but this time I am deliberately slowing myself down. Every morning, I walk into my lab, close the door of my man cave, sit in front of the whiteboard and the editor, and force myself to think before typing.

This phase is pure design.

No shortcuts, no premature optimizations, no “I’ll fix it later”. Just architecture. The kind of work that does not look impressive on GitHub but determines whether the next years will be productive or miserable.

The architecture document is finally taking shape, and for the first time in a long while, it feels solid.

Defining the Shape of the System

One of the biggest mistakes in the original ZooBC implementation was allowing the structure to emerge organically. At the time, it felt flexible. In hindsight, it was a slow descent into entanglement.

This time, the module structure comes first.

Right now, the project looks like this:

```
zoobc/
├── common/       # Types, errors, constants
├── crypto/       # Hashing, signatures, RNG
├── database/     # SQLite wrapper with RAII
├── model/        # Block, Transaction, Account, Node
├── transaction/  # Validation, execution, mempool
├── consensus/    # Blocksmith selection, participation scores
├── core/         # Blockchain, block production
├── p2p/          # Networking, fork resolution
├── services/     # Registry, receipts, escrow, snapshots
├── api/          # REST API server
└── sync/         # Fast sync, spine blocks
```

This structure is not accidental. Each directory exists because it represents a responsibility that must remain isolated over time.

More importantly, every module has explicit dependencies.

The rules are strict:

- `crypto` depends on nothing.
- `common` depends on nothing.
- `database` depends only on `common`.
- `model` depends only on `crypto` and `common`.

And so on.

This layered approach is my way of preventing the circular dependencies that completely poisoned the Go version. Back then, changing a single transaction rule meant touching half the codebase. I never want to experience that again.
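Rules like these are easiest to keep when a machine checks them. Below is a minimal sketch, not the project's actual tooling, that treats the dependency rules as data: `hasCycle` runs a depth-first search to catch circular dependencies, and `violations` lists any edge in an observed graph (for example, one scraped from `#include` lines) that the rules do not allow. The names `DepGraph`, `kLayerRules`, `hasCycle`, and `violations` are hypothetical, and only the four rules stated above are encoded.

```cpp
#include <algorithm>
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical representation: module name -> modules it may depend on.
using DepGraph = std::map<std::string, std::vector<std::string>>;

// Only the four rules stated in the post; other modules are deliberately omitted.
const DepGraph kLayerRules = {
    {"crypto", {}},
    {"common", {}},
    {"database", {"common"}},
    {"model", {"crypto", "common"}},
};

namespace {
enum class Mark { InProgress, Done };

// Depth-first search; meeting a node that is still "in progress" is a back edge, i.e. a cycle.
bool visit(const DepGraph& g, const std::string& node, std::map<std::string, Mark>& marks) {
    auto it = marks.find(node);
    if (it != marks.end()) return it->second == Mark::InProgress;
    marks[node] = Mark::InProgress;
    auto deps = g.find(node);
    if (deps != g.end()) {
        for (const auto& dep : deps->second) {
            if (visit(g, dep, marks)) return true;
        }
    }
    marks[node] = Mark::Done;
    return false;
}
}  // namespace

// True if any chain of dependencies leads back to where it started.
bool hasCycle(const DepGraph& g) {
    std::map<std::string, Mark> marks;
    for (const auto& [name, deps] : g) {
        (void)deps;
        if (visit(g, name, marks)) return true;
    }
    return false;
}

// Every edge in `actual` that `rules` does not permit, formatted as "from -> to".
std::vector<std::string> violations(const DepGraph& rules, const DepGraph& actual) {
    assert(!hasCycle(rules));  // the declared layering must itself be acyclic
    std::vector<std::string> out;
    for (const auto& [name, deps] : actual) {
        auto allowed = rules.find(name);
        for (const auto& dep : deps) {
            bool ok = allowed != rules.end() &&
                      std::find(allowed->second.begin(), allowed->second.end(), dep) !=
                          allowed->second.end();
            if (!ok) out.push_back(name + " -> " + dep);
        }
    }
    return out;
}
```

In a real build, the same constraint could also be enforced by giving each module its own library target and linking only the permitted dependencies, so a forbidden `#include` fails at build time instead of in a separate check.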

Every dependency must earn its existence.

Learning From Old Mistakes

While designing this architecture, I keep the old Go code open on a second screen. Not to copy it, but to interrogate it. To ask: why did this become hard to reason about?

One insight stands out above all others: transaction processing was never clearly defined as a lifecycle.

In ZooBC, transactions cannot be treated as simple “apply or reject” operations. They exist in time. They move between states. They can be accepted into the mempool, confirmed in a block, or rolled back when a fork is resolved.

This time, transaction processing is built around a strict three-phase lifecycle:

- `ApplyUnconfirmed`: used when a transaction enters the mempool.
- `ApplyConfirmed`: used when the transaction is included in a block.
- `UndoApplyUnconfirmed`: used when a fork invalidates previously accepted transactions.

If you get this wrong, transactions leak across forks. Balances drift. State becomes inconsistent in ways that are incredibly hard to debug. I have lived that pain already.

In the C++ architecture, this pattern is not an afterthought. It is baked in from the beginning.
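To make the three phases concrete, here is a hedged sketch of what such an interface might look like for a plain money transfer. It assumes a hypothetical account model with two figures: `balance` (confirmed on chain) and `spendable` (balance minus whatever is reserved by mempool transactions). `TransactionAction`, `SendMoney`, `Account`, and `Ledger` are illustrative names, not the project's real types, and crediting the fee to a blocksmith is left out.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>
#include <utility>

// Hypothetical account model: confirmed balance plus mempool-aware spendable balance.
struct Account {
    std::int64_t balance = 0;
    std::int64_t spendable = 0;
};

using Ledger = std::map<std::string, Account>;

// One method per lifecycle phase.
class TransactionAction {
public:
    virtual ~TransactionAction() = default;
    virtual bool applyUnconfirmed(Ledger& ledger) const = 0;      // entering the mempool
    virtual void applyConfirmed(Ledger& ledger) const = 0;        // included in a block
    virtual void undoApplyUnconfirmed(Ledger& ledger) const = 0;  // invalidated, e.g. by a fork
};

class SendMoney : public TransactionAction {
public:
    SendMoney(std::string from, std::string to, std::int64_t amount, std::int64_t fee)
        : from_(std::move(from)), to_(std::move(to)), amount_(amount), fee_(fee) {
        assert(amount_ >= 0 && fee_ >= 0);
    }

    // Reserve amount + fee so the same funds cannot be spent twice from the mempool.
    bool applyUnconfirmed(Ledger& ledger) const override {
        Account& sender = ledger[from_];
        if (sender.spendable < amount_ + fee_) return false;
        sender.spendable -= amount_ + fee_;
        return true;
    }

    // The sender's spendable balance was already reduced; now move the confirmed balance.
    // (Fee credit to the block producer is omitted in this sketch.)
    void applyConfirmed(Ledger& ledger) const override {
        ledger[from_].balance -= amount_ + fee_;
        Account& recipient = ledger[to_];
        recipient.balance += amount_;
        recipient.spendable += amount_;
    }

    // Release exactly the reservation made by applyUnconfirmed.
    void undoApplyUnconfirmed(Ledger& ledger) const override {
        ledger[from_].spendable += amount_ + fee_;
    }

private:
    std::string from_, to_;
    std::int64_t amount_, fee_;
};
```

The point of the split is symmetry: whatever `applyUnconfirmed` reserves, `undoApplyUnconfirmed` must release, so a transaction dropped by a fork leaves no trace in either balance.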

Making Failure Explicit

Another deliberate decision I am making early is how errors are handled.

I am using a Result<T> type throughout the system. Every operation that can fail returns either a value or an error. No exceptions used for control flow. No silent failures. No “this should never happen” comments.

If something can go wrong, it must say so explicitly.

This approach forces discipline. It makes error paths visible. It makes incorrect assumptions uncomfortable. And most importantly, it makes the system easier to reason about when things go wrong, which they always do.
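For readers unfamiliar with the pattern, a minimal `Result<T>` can be built on `std::variant`. This is a sketch under simple assumptions (a hypothetical `Error` that carries only a message), not the project's actual type; `parseAmount` is likewise a hypothetical example of a fallible operation that reports failure through its return value instead of throwing.

```cpp
#include <cassert>
#include <limits>
#include <string>
#include <utility>
#include <variant>

// Hypothetical error type; a real one might carry codes and context.
struct Error {
    std::string message;
};

// Holds either a T or an Error, never both.
template <typename T>
class Result {
public:
    static Result ok(T value) { return Result(std::move(value)); }
    static Result err(std::string message) { return Result(Error{std::move(message)}); }

    bool isOk() const { return std::holds_alternative<T>(data_); }

    // Reading the wrong alternative is a programming error, so it asserts.
    const T& value() const {
        assert(isOk());
        return std::get<T>(data_);
    }
    const Error& error() const {
        assert(!isOk());
        return std::get<Error>(data_);
    }

private:
    explicit Result(T value) : data_(std::move(value)) {}
    explicit Result(Error error) : data_(std::move(error)) {}
    std::variant<T, Error> data_;
};

// Parses a non-negative decimal amount; every failure path is an explicit Result.
Result<long long> parseAmount(const std::string& text) {
    if (text.empty()) return Result<long long>::err("empty amount");
    long long value = 0;
    for (char c : text) {
        if (c < '0' || c > '9') return Result<long long>::err("not a digit: " + std::string(1, c));
        const long long digit = c - '0';
        if (value > (std::numeric_limits<long long>::max() - digit) / 10)
            return Result<long long>::err("amount overflows");
        value = value * 10 + digit;
    }
    return Result<long long>::ok(value);
}
```

Callers must check `isOk()` before touching the value, which is exactly the discipline described above. On a C++23 toolchain, `std::expected<T, E>` from `<expected>` provides the same idea in the standard library.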

Slow, But Correct

From the outside, these two weeks might look unproductive. No commits that do anything flashy. No demos. No running node yet.

From the inside, this feels like the most important work I have done on ZooBC so far.

I am building the foundation I wish I had built the first time. One that can carry the weight of the protocol without collapsing under its own complexity.

Next comes code. But not yet.

For now, I am still laying the ground on which everything else will stand.