For the past two weeks, I have stopped trusting myself.

That sounds dramatic, but it is exactly the right mindset at this stage. A blockchain implementation cannot be mostly correct. It must be correct everywhere, all the time. One subtle consensus bug is enough to fork the network, split state, and destroy trust permanently.

Hope is not a testing strategy.

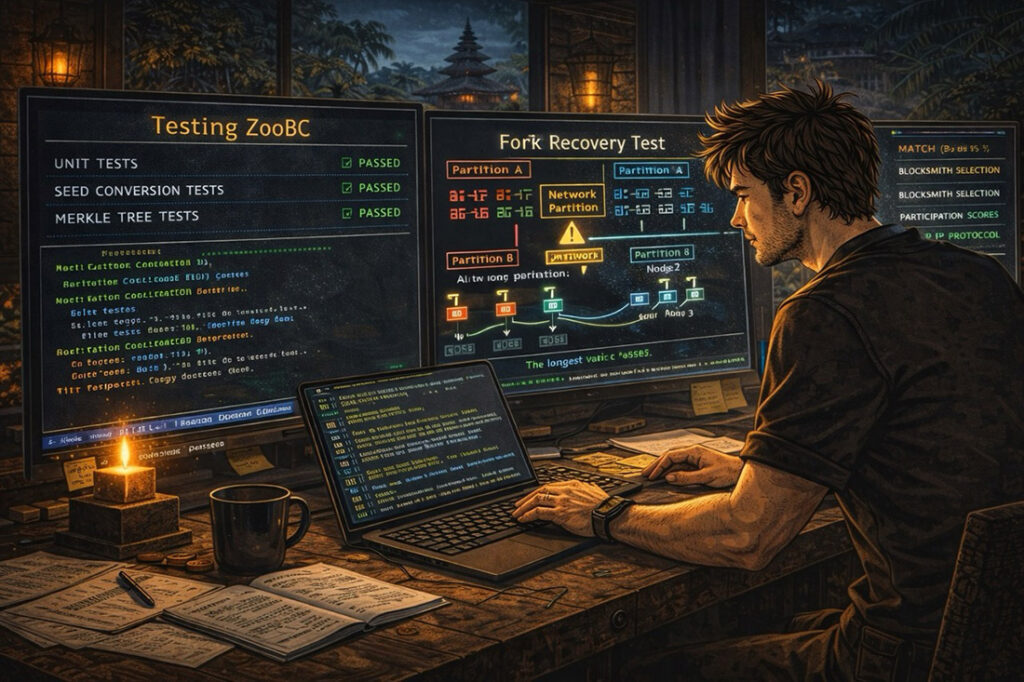

So this phase is about proving, over and over again, that ZooBC behaves exactly as intended.

Testing as a Layered Defense

The testing strategy is deliberately multi-layered. Each layer catches a different class of failure, and together they form something close to confidence.

The first layer is unit testing.

Individual functions are tested in isolation: cryptographic primitives, serialization logic, database operations, deterministic random number generation. These tests are fast and ruthless. They catch obvious bugs early, before they have a chance to hide inside higher-level logic.

This is the easy part.
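As an illustration of this layer, here is a minimal sketch of a determinism check for random number generation. It assumes `std::mt19937_64` as a stand-in for whatever generator the project actually uses, and `drawSequence` and `isDeterministic` are hypothetical names, not functions from the ZooBC codebase.

```cpp
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Hypothetical helper: draw `count` values from a generator seeded with `seed`.
// std::mt19937_64 is only a stand-in for the project's real generator.
std::vector<std::uint64_t> drawSequence(std::uint64_t seed, std::size_t count) {
    std::mt19937_64 engine(seed);
    std::vector<std::uint64_t> values;
    values.reserve(count);
    for (std::size_t i = 0; i < count; ++i) {
        values.push_back(engine());
    }
    return values;
}

// The property under test: the same seed must always yield the same sequence.
bool isDeterministic(std::uint64_t seed, std::size_t count) {
    return drawSequence(seed, count) == drawSequence(seed, count);
}
```

A unit test would call `isDeterministic` for a handful of seeds and fail loudly on the first mismatch.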

Verifying Algorithms Against Reality

The second layer is where things get interesting.

For every algorithm that exists in the original Go implementation, I generate test vectors and compare results directly. The same inputs must produce identical outputs. Not equivalent. Identical.

This process catches several subtle issues that would have been painful to debug later:

- Endianness mistakes in seed conversion

- An off-by-one error in merkle tree depth calculation

- A missing field in transaction serialization

None of these would have caused immediate crashes. All of them would have caused silent divergence over time.

This kind of bug is the most dangerous kind.
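To make the test-vector idea concrete, here is a small sketch of how an endianness mistake gets caught. The little-endian convention, the names `seedToUint64LE`, `SeedVector`, and `countMismatches`, and the example bytes are all assumptions for illustration; they are not the actual ZooBC vectors or functions.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical conversion: read 8 seed bytes as a little-endian 64-bit
// integer, independent of the byte order of the host machine.
std::uint64_t seedToUint64LE(const std::array<std::uint8_t, 8>& seedBytes) {
    std::uint64_t value = 0;
    for (std::size_t i = 0; i < seedBytes.size(); ++i) {
        value |= static_cast<std::uint64_t>(seedBytes[i]) << (8 * i);
    }
    return value;
}

// One test vector: an input plus the output recorded from the reference
// implementation (here, values made up for the example).
struct SeedVector {
    std::array<std::uint8_t, 8> seedBytes;
    std::uint64_t expected;
};

// Count vectors whose result is not bit-for-bit identical to the reference.
std::size_t countMismatches(const std::vector<SeedVector>& vectors) {
    std::size_t mismatches = 0;
    for (const auto& v : vectors) {
        if (seedToUint64LE(v.seedBytes) != v.expected) {
            ++mismatches;
        }
    }
    return mismatches;
}
```

For the bytes `01 02 03 04 05 06 07 08`, the little-endian reading is `0x0807060504030201`. A port that reads them big-endian produces `0x0102030405060708` instead, and the vector comparison flags it immediately.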

Integration Testing the Whole System

Once individual pieces behave correctly, the next step is to see if they behave correctly together.

Integration tests spin up multiple nodes locally, connect them into a small network, submit transactions, and observe what happens. Transactions must propagate. Blocks must be produced. State must converge.

This validates the entire stack: networking, mempool logic, block production, database persistence, and consensus rules. When something breaks here, it is usually because two components make slightly different assumptions about reality.

Those differences get resolved immediately, not ignored.
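The "state must converge" check can be sketched as a simple predicate over what each node reports. This is only an illustration: `TipReport` and `hasConverged` are hypothetical names, and comparing tip height and block hash is an assumed definition of convergence, not necessarily the one the real test harness uses.

```cpp
#include <algorithm>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical snapshot of the chain tip reported by one local node.
struct TipReport {
    std::uint32_t height;
    std::string blockHash;  // hex-encoded hash of the tip block
};

// True when every node reports the same tip height and the same tip hash.
// An empty list of reports is treated as "not converged".
bool hasConverged(const std::vector<TipReport>& tips) {
    if (tips.empty()) {
        return false;
    }
    const TipReport& first = tips.front();
    return std::all_of(tips.begin(), tips.end(), [&first](const TipReport& t) {
        return t.height == first.height && t.blockHash == first.blockHash;
    });
}
```

An integration test would poll each node, collect the reports, and require `hasConverged` to become true within some timeout after the last transaction is submitted.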

Documenting Comparisons Explicitly

One habit I force myself into this time is documentation.

Every comparison between the Go and C++ implementations is written down, not kept in my head. These documents live under docs/ and act as permanent evidence that things were checked deliberately.

So far, this includes:

- blocksmith-selection-comparison.md: Verified MATCH for all selection logic

- participation-score-comparison.md: Verified MATCH for all score calculations

- P2P_PROTOCOL_COMPARISON.md: Verified compatibility for all message types

Writing these documents is slow and slightly annoying.

Which is exactly why they are valuable.

Breaking the Network on Purpose

The final layer of testing is intentionally destructive.

Fork recovery tests deliberately create bad conditions:

- Partition the network

- Produce blocks independently on both sides

- Heal the partition

- Observe what happens

The expected outcome is boring: the network must converge to the longest valid chain, every time.

Anything else is unacceptable.

Here, the C++ implementation actually improves on the Go version. Before accepting a fork claim from a peer, the node now verifies that claim with at least three other peers. A single peer is not trusted to dictate reality.

This additional confirmation step makes eclipse attacks significantly harder. A malicious node cannot isolate a victim and convince it to switch to a fake chain without controlling multiple peers.

It is a small change, but a meaningful one.
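The confirmation rule can be sketched roughly as follows. Only the "at least three other peers" threshold comes from the description above; the `PeerResponse` structure, the `forkClaimConfirmed` function, and the idea of comparing block hashes reported by peers are assumptions made for illustration, not the actual ZooBC code.

```cpp
#include <cstddef>
#include <set>
#include <string>
#include <vector>

// Hypothetical answer from a peer asked which block it has at the fork height.
struct PeerResponse {
    std::string peerId;
    std::string blockHash;
};

// Threshold taken from the text: at least three other peers must agree.
constexpr std::size_t kMinConfirmations = 3;

// Accept a fork claim only if enough distinct peers, other than the one
// making the claim, report the same block hash.
bool forkClaimConfirmed(const std::string& claimingPeerId,
                        const std::string& claimedHash,
                        const std::vector<PeerResponse>& responses) {
    std::set<std::string> confirmingPeers;
    for (const auto& response : responses) {
        if (response.peerId == claimingPeerId) {
            continue;  // the claimant cannot confirm its own claim
        }
        if (response.blockHash == claimedHash) {
            confirmingPeers.insert(response.peerId);  // duplicates count once
        }
    }
    return confirmingPeers.size() >= kMinConfirmations;
}
```

Counting distinct peer IDs rather than raw responses means one peer answering several times cannot reach the threshold by itself, though this sketch does nothing about an attacker who controls several identities.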

Confidence Without Complacency

At this point, ZooBC is not just running.

It is resisting.

Resisting bad inputs.

Resisting partial failures.

Resisting malicious behavior.

Late at night, sitting in my lab, I rerun the same tests again, watching everything pass without surprises. That quiet, boring green output is exactly what I want to see.

This is not about perfection.

It is about earning the right to trust the system.